Oberseminar "Mathematik des Maschinellen Lernens und Angewandte Analysis" - Albert Alcalde Zafra

Clustering in pure-attention hardmax transformers

| Date: | 20 November 2024, 14:00 - 15:00 |

| Category: | Event |

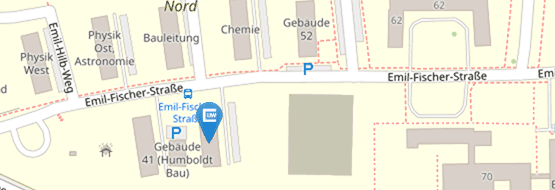

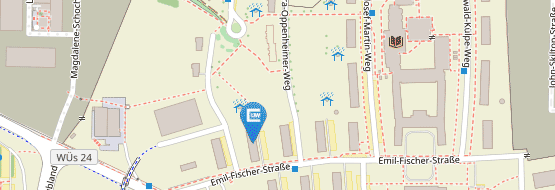

| Location: | Hubland Nord, Building 40, 01.003 |

| Organizer: | Institut für Mathematik |

| Speaker: | Albert Alcalde Zafra, FAU |

Transformers are extremely successful models in machine learning with poorly understood mathematical properties. In this talk, we rigorously characterize the asymptotic behavior of transformers with hardmax self-attention and normalization sublayers as the number of layers tends to infinity. By viewing such transformers as discrete-time dynamical systems describing the evolution of interacting particles in a Euclidean space, and thanks to a geometric interpretation of the self-attention mechanism based on hyperplane separation, we show that the transformer inputs asymptotically converge to a clustered equilibrium determined by special particles we call leaders. We leverage this theoretical understanding to solve a sentiment analysis problem from language processing using a fully interpretable transformer model, which effectively captures ‘context’ by clustering meaningless words around leader words carrying the most meaning.
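As a rough illustration of the dynamics described in the abstract, the following minimal sketch treats each layer as one step of a discrete-time particle system. It is not the speaker's model: the attention matrix is assumed to be the identity, the factor `1 / (1 + alpha)` is an assumed stand-in for the normalization sublayer, and a "leader" is assumed to be a particle that attends only to itself. The names `hardmax_step`, `leaders`, and `alpha` are hypothetical.

```python
def attended_indices(points, i):
    """Indices j maximizing the inner product <z_i, z_j> (hardmax attention).

    Assumption: the attention matrix is the identity."""
    zi = points[i]
    scores = [sum(a * b for a, b in zip(zi, zj)) for zj in points]
    best = max(scores)
    return [j for j, s in enumerate(scores) if s == best]


def leaders(points):
    """Particles that attend only to themselves (assumed notion of leader)."""
    return [i for i in range(len(points)) if attended_indices(points, i) == [i]]


def hardmax_step(points, alpha=1.0):
    """One layer: move each particle toward the mean of the particles it attends to.

    Assumption: the normalization sublayer is modeled by dividing by 1 + alpha."""
    new_points = []
    for i, zi in enumerate(points):
        group = [points[j] for j in attended_indices(points, i)]
        mean = [sum(coords) / len(group) for coords in zip(*group)]
        new_points.append([(a + alpha * m) / (1 + alpha) for a, m in zip(zi, mean)])
    return new_points


# Four particles in the plane: two far from the origin, two closer to it.
start = [[2.0, 0.0], [0.0, 2.0], [1.0, 0.1], [0.1, 1.0]]

final = start
for _ in range(40):  # 40 layers
    final = hardmax_step(final)
```

In this toy configuration the two outer particles attend only to themselves and therefore never move, while each inner particle attends to the nearer outer particle and halves its distance to it at every layer, so the configuration collapses onto two clusters.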