Projects

Project management:

Prof. Dr. Leon Bungert

E-Mail: leon.bungert@uni-wuerzburg.de

Project period: 2024-2027

Project description:

Geometric Methods for Adversarial Robustness (GeoMAR):

The vulnerability of deep learning-based systems to adversarial attacks and distribution shifts continues to pose a substantial security risk in real-world applications. Many approaches designed to improve the robustness of neural networks are motivated only heuristically, while their mathematical understanding and their precise robustification effect remain unclear. As a result, the majority of defenses proposed in the past have been shown to be ineffective by subsequent third-party evaluations. One of the few truly robust defenses is adversarial training, which is also backed by a developing mathematical theory. However, the method suffers from a trade-off between the generalization ability of the model on clean data and its robustness against adversarial attacks. While additional data can enhance the performance of adversarial training, current approaches to further improve robustness by scaling the number of training samples and the model size are prohibitively expensive and theoretically poorly understood.
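For orientation, adversarial training is commonly written as a min-max problem in the literature. The display below is a sketch of that standard formulation, not a formulation specific to GeoMAR. The symbols are assumptions introduced here: $f_\theta$ is a classifier with parameters $\theta$, $\ell$ a loss function, $\mathcal{D}$ the data distribution, and $\varepsilon$ the attack budget in some chosen norm.

```latex
\min_{\theta} \; \mathbb{E}_{(x,y)\sim\mathcal{D}}
\left[ \max_{\|\delta\| \le \varepsilon} \ell\big(f_\theta(x+\delta),\, y\big) \right]
```

The inner maximization searches for the worst-case perturbation $\delta$ of each input, and the outer minimization trains the model against it. For $\varepsilon = 0$ the problem reduces to standard training on clean data, which is one way to see where the trade-off between accuracy and robustness enters.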

In the GeoMAR project, we aim to develop a mathematical theory for creating effective robustification approaches. Beyond theoretical insights, we plan to transfer the developed knowledge to practical algorithms. The key objectives of GeoMAR are to analyze the geometry of robustness, tackle the accuracy-robustness trade-off, analyze and compare the geometric properties of classifiers using a novel test-time approach, and achieve scalability to large datasets.

To achieve this, we will view robustness through a geometric lens and model it as a geometric regularity property of the decision boundary of a classifier. We will use this framework to develop novel geometrically motivated robust training methods and solve the resulting problems using tailored optimization methods. The robustness of these approaches will be quantified with novel test-time methods, and we will scale them by leveraging generative models for the computation of attacks. The desired outcomes of GeoMAR are geometric, interpretable, and scalable training methods that provably mitigate the trade-off between accuracy and robustness. In this way, our project will promote the mathematical understanding of robustness in machine learning and generate efficient algorithms for training deep learning systems for real-world applications.

GeoMAR is funded within the DFG Priority Program SPP 2298.

Current press release

Here you can find the link to the current press release of the university about our project.

Project management:

Prof. Dr. Leon Bungert

E-Mail: leon.bungert@uni-wuerzburg.de

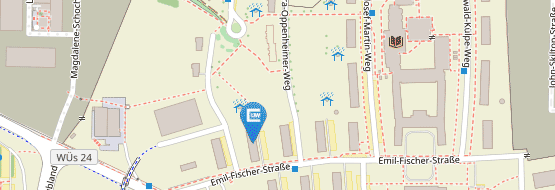

Web: www.mathematik.uni-wuerzburg.de/comfort/

Project period: 2024-2027

Project description:

Compression Methods for Robustness and Transferability (COMFORT):

Modern machine learning (ML) methods like image classifiers or generative models have the potential to revolutionize science, industry, and our society. To employ ML more widely and sustainably, it is vital to advance the development of compressed and resilient models suitable for a wide range of data and applications. A drawback of state-of-the-art methods is that training and inference with large neural networks require enormous computational resources and expert knowledge. This limits their public availability and results in a large ecological footprint. While considerable progress has been made in designing sparse ML models through pruning or sparse regularization, most of these approaches view sparsity merely as a small number of model parameters. This simplistic point of view restricts the expressivity and applicability of the resulting models, which is why, in this project, we will focus on compressed models instead. Such models can be thought of as being sparse in a different domain, e.g., in the Fourier representation of convolutional neural networks.
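To illustrate what "sparse in a different domain" can mean, here is a minimal NumPy sketch under stated assumptions. It is a toy example, not a method from the COMFORT project: the helper `compress_in_fourier` and the 8x8 cosine kernel are hypothetical and chosen only so that the effect is easy to see.

```python
import numpy as np

def compress_in_fourier(kernel, keep):
    """Zero all but the `keep` largest-magnitude 2D Fourier coefficients.

    Hypothetical helper for illustration. Returns the sparse coefficient
    array and the real-valued kernel reconstructed from it.
    """
    coeffs = np.fft.fft2(kernel)
    magnitudes = np.abs(coeffs).ravel()
    # Indices of the `keep` largest coefficients by magnitude.
    top = np.argsort(magnitudes)[-keep:]
    mask = np.zeros(magnitudes.shape, dtype=bool)
    mask[top] = True
    sparse_coeffs = np.where(mask.reshape(coeffs.shape), coeffs, 0)
    reconstruction = np.real(np.fft.ifft2(sparse_coeffs))
    return sparse_coeffs, reconstruction

# Toy 8x8 kernel (an assumption for this sketch): every spatial entry is
# nonzero, but it is a sum of two cosines, so only four Fourier
# coefficients carry the signal.
i, j = np.meshgrid(np.arange(8), np.arange(8), indexing="ij")
kernel = np.cos(2 * np.pi * i / 8 + 0.3) + np.cos(2 * np.pi * j / 8 + 0.7)

sparse_coeffs, reconstruction = compress_in_fourier(kernel, keep=4)
```

Pruning this kernel in the spatial domain would have to discard nonzero weights, whereas four Fourier coefficients reproduce it up to floating-point error. Real network weights are not exactly sparse in this way, so in practice such a truncation would be lossy.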

Another shortcoming of ML models is their susceptibility to adversarial attacks (imperceptible input perturbations intended to fool ML models) and their tendency to hallucinate in generative tasks. These issues hinder the usage of ML in safety-critical applications. To counteract this, substantial progress has been made based on data augmentation and robust optimization techniques. While it has been observed that such approaches trade off accuracy against robustness, the interplay with the compression of ML models is poorly understood.

Finally, most current approaches for sparsity or robustness are tailored to certain application scenarios and are hard to transfer to different data. Prominent examples of this are adversarial training, which specializes in image classification problems and works with data augmentations like noise or rotations, and sparse training for convolutional neural networks applied to imaging tasks.

In this project, we aim to achieve breakthroughs in developing compressed, flexible, and robust ML models for diverse datasets involving image, audio, and graph data. Along the way, our application-driven research program will also advance the mathematical understanding of learning at the interface of efficacy and resilience.

Our consortium bridges the gap between mathematical theory, computer science, and innovative applications. It encompasses five universities and research institutes in Germany. They will develop mathematical theory for the robustness of compressed ML models, design parsimonious graph learning architectures, develop efficient training and inference methods for compressed models, and analyze their robustness. Furthermore, they will work on compressed learning for audio and imaging data and for inverse problems, and will investigate the adversarial robustness of compressed models for audio data in speech applications. The consortium is completed by a tech start-up based in Munich that specializes in compression techniques, including pruning, quantization, and distillation, for generic ML models with a wide range of applications. It will be key for integrating the scientific advancements of the consortium into practice.