Advanced Seminar in Scientific Computing (Nadja Vater)

A Preconditioner for Least Squares Problems with Application to Neural Network Training

| Date: | 15.07.2022, 12:00 - 12:30 |

| Category: | Event |

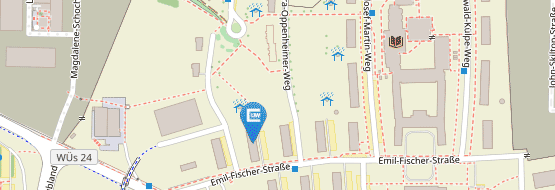

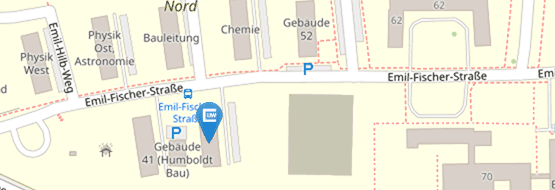

| Location: | Hubland Nord, Building 30, 30.02.003 |

| Organizer: | Chair of Mathematics IX (Scientific Computing) |

| Speaker: | Nadja Vater |

Abstract:

This talk presents a preconditioned gradient descent scheme for a regularized nonlinear least squares problem that arises when approximating given data with a neural network.

Specifically, regularized over-parameterized nonlinear problems are considered, and the construction of a novel left preconditioner is illustrated. This preconditioner is based on randomized linear algebra techniques [1].

The talk discusses theoretical and computational aspects of the proposed preconditioning scheme for a gradient method. It also presents results of numerical experiments in which the preconditioned gradient method is applied to nonlinear least squares problems arising in neural network training. These results demonstrate the effectiveness of preconditioning compared to a standard gradient descent method.
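To give a feel for the general idea, the following is a minimal sketch and not the construction from the talk or from [1]. It makes several simplifying assumptions: the problem is a linear, unregularized, over-parameterized least squares problem rather than a nonlinear regularized one, and the left preconditioner `P` is built with a standard Gaussian sketch followed by a QR factorization. All names (`sketched_left_preconditioner`, `gradient_descent`, `oversampling`) and the test problem are hypothetical.

```python
import numpy as np

def sketched_left_preconditioner(A, oversampling=4, seed=0):
    """Return P with P^T P close to (A A^T)^{-1}, built from a Gaussian sketch.

    Assumption: a generic sketch-and-precondition recipe, used here only
    as a stand-in for a randomized left preconditioner.
    """
    m, n = A.shape
    k = oversampling * m                      # sketch size (hypothetical choice)
    rng = np.random.default_rng(seed)
    Omega = rng.standard_normal((n, k)) / np.sqrt(k)
    # (A Omega)^T = Q R  implies  (A Omega)(A Omega)^T = R^T R, approximately A A^T
    R = np.linalg.qr((A @ Omega).T, mode="r")
    return np.linalg.solve(R.T, np.eye(m))    # P = R^{-T}

def gradient_descent(A, b, P=None, iters=300):
    """Minimize 0.5 * ||P (A x - b)||^2 by gradient descent (P = I if None)."""
    if P is not None:
        A, b = P @ A, P @ b                   # left-precondition the residual
    step = 1.0 / np.linalg.norm(A, 2) ** 2    # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        x -= step * (A.T @ (A @ x - b))
    return x

# Ill-conditioned over-parameterized test problem: 50 equations, 400 unknowns,
# singular values spread between 1 and 1e-3.
rng = np.random.default_rng(1)
m, n = 50, 400
U, _ = np.linalg.qr(rng.standard_normal((m, m)))
V, _ = np.linalg.qr(rng.standard_normal((n, m)))
A = U @ np.diag(np.logspace(0, -3, m)) @ V.T
b = A @ rng.standard_normal(n)

P = sketched_left_preconditioner(A)
x_plain = gradient_descent(A, b)
x_pre = gradient_descent(A, b, P)

def relative_residual(x):
    return np.linalg.norm(A @ x - b) / np.linalg.norm(b)

res_plain = relative_residual(x_plain)
res_pre = relative_residual(x_pre)
print(f"plain GD:          {res_plain:.2e}")
print(f"preconditioned GD: {res_pre:.2e}")
```

With the same number of iterations, the preconditioned run should reach a much smaller residual of the original system, because `P @ A` is far better conditioned than `A`. In a nonlinear setting, a preconditioner of this kind would presumably be applied to a Jacobian rather than a fixed matrix; that extension is not shown here.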

References

[1] N. Vater and A. Borzì. Preconditioned Gradient Descent for Data Approximation with Shallow Neural Networks. International Conference on Machine Learning, Optimization and Data Science, 2022 (to appear).